Option object to configure the Paddle Inference backend.

#include <option.h>

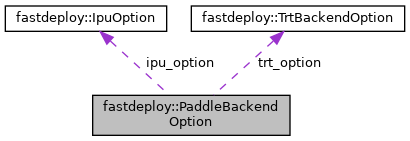

Collaboration diagram for fastdeploy::PaddleBackendOption:

Public Member Functions

| Return type | Function | Description |
| --- | --- | --- |
| void | DisableTrtOps (const std::vector< std::string > &ops) | Prevent the given operator types from running on TensorRT. |
| void | DeletePass (const std::string &pass_name) | Delete a pass by name. |
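The two member functions above can be exercised as in the sketch below. It does not include the FastDeploy headers: `sketch::PaddleBackendOption` is a hypothetical stand-in that mirrors only the two documented signatures, and its storage members (`trt_disabled_ops`, `deleted_passes`) are assumptions made so the example compiles on its own. The helper `MakeOptionWithExclusions`, the operator type names, and the pass name are illustrative values only.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical stand-in for fastdeploy::PaddleBackendOption so this sketch
// builds without FastDeploy. Only the documented signatures are mirrored;
// the two vectors below are assumed storage, not the real struct layout.
namespace sketch {
struct PaddleBackendOption {
  // Append operator types that should not run on TensorRT.
  void DisableTrtOps(const std::vector<std::string>& ops) {
    trt_disabled_ops.insert(trt_disabled_ops.end(), ops.begin(), ops.end());
  }
  // Record a pass that should be deleted, identified by name.
  void DeletePass(const std::string& pass_name) {
    deleted_passes.push_back(pass_name);
  }
  std::vector<std::string> trt_disabled_ops;  // assumption
  std::vector<std::string> deleted_passes;    // assumption
};
}  // namespace sketch

// Hypothetical helper: excludes two operator types from TensorRT and
// deletes one pass. The names passed in are example values.
inline sketch::PaddleBackendOption MakeOptionWithExclusions() {
  sketch::PaddleBackendOption option;
  option.DisableTrtOps({"pool2d", "elementwise_add"});
  option.DeletePass("fc_fuse_pass");
  return option;
}
```

With the real struct, the same two calls would be made on a `fastdeploy::PaddleBackendOption` instance; how the names are stored and later applied is up to the backend.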

Public Attributes

| Type | Attribute | Description |
| --- | --- | --- |
| bool | enable_log_info = false | Print log information while initializing the Paddle Inference backend. |
| bool | enable_mkldnn = true | Enable MKLDNN when running inference on CPU. |
| bool | enable_trt = false | Use Paddle Inference with TensorRT to run inference on GPU. |
| bool | enable_memory_optimize = true | Whether to enable memory optimization. Default: true. |
| bool | switch_ir_debug = false | Whether to enable IR debug. Default: false. |
| bool | collect_trt_shape = false | Collect shape information for the model when enable_trt is true. |
| int | mkldnn_cache_size = -1 | Cache size for MKLDNN input shapes, used when the input data changes dynamically. |
| int | gpu_mem_init_size = 100 | Initial memory size (MB) for GPU. |
| bool | enable_fixed_size_opt = false | Enable fixed-size optimization for transformer models. |
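As a rough illustration of how the attributes above combine, the sketch below sets the TensorRT-related fields together. It is self-contained and does not include `option.h`: `sketch_attrs::PaddleBackendOption` is a hypothetical stand-in that copies only the documented attribute names and defaults, and the helper `MakeTrtShapeCollectionOption` and the value 512 are assumptions chosen for the example.

```cpp
#include <cassert>

// Hypothetical stand-in mirroring the documented attributes and their
// default values; it is not the real fastdeploy::PaddleBackendOption.
namespace sketch_attrs {
struct PaddleBackendOption {
  bool enable_log_info = false;
  bool enable_mkldnn = true;
  bool enable_trt = false;
  bool enable_memory_optimize = true;
  bool switch_ir_debug = false;
  bool collect_trt_shape = false;
  int mkldnn_cache_size = -1;
  int gpu_mem_init_size = 100;  // MB
  bool enable_fixed_size_opt = false;
};
}  // namespace sketch_attrs

// Hypothetical helper: start from the defaults, then enable TensorRT,
// request shape collection, and raise the initial GPU memory size.
inline sketch_attrs::PaddleBackendOption MakeTrtShapeCollectionOption() {
  sketch_attrs::PaddleBackendOption option;
  option.enable_trt = true;         // run on GPU through TensorRT
  option.collect_trt_shape = true;  // documented as relevant when enable_trt is true
  option.gpu_mem_init_size = 512;   // example value in MB, not a recommendation
  option.enable_log_info = true;    // print log information during initialization
  return option;
}
```

Fields that are not assigned keep the defaults listed in the table, so only the settings that differ from the defaults need to be written out.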

Detailed Description

Option object to configure the Paddle Inference backend.

The documentation for this struct was generated from the following file:

- /fastdeploy/my_work/FastDeploy/fastdeploy/runtime/backends/paddle/option.h

1.8.13