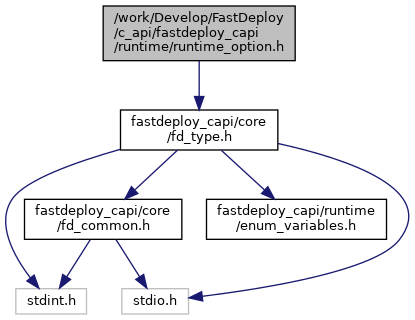

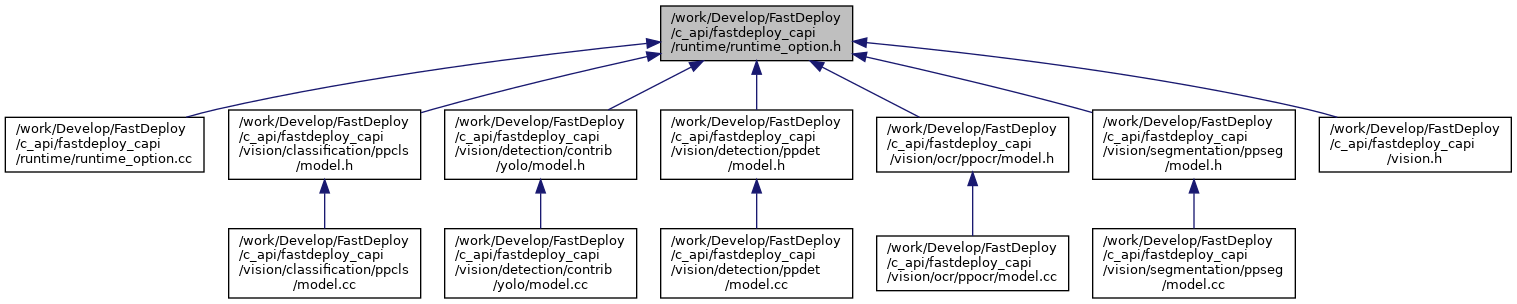

#include "fastdeploy_capi/core/fd_type.h"

Go to the source code of this file.

Typedefs | |

| typedef struct FD_C_RuntimeOptionWrapper | FD_C_RuntimeOptionWrapper |

Functions | |

| FASTDEPLOY_CAPI_EXPORT __fd_give FD_C_RuntimeOptionWrapper * | FD_C_CreateRuntimeOptionWrapper () |

| Create a new FD_C_RuntimeOptionWrapper object. More... | |

| FASTDEPLOY_CAPI_EXPORT void | FD_C_DestroyRuntimeOptionWrapper (__fd_take FD_C_RuntimeOptionWrapper *fd_c_runtime_option_wrapper) |

| Destroy a FD_C_RuntimeOptionWrapper object. More... | |

| FASTDEPLOY_CAPI_EXPORT void | FD_C_RuntimeOptionWrapperSetModelPath (__fd_keep FD_C_RuntimeOptionWrapper *fd_c_runtime_option_wrapper, const char *model_path, const char *params_path, const FD_C_ModelFormat format) |

| Set path of model file and parameter file. More... | |

| FASTDEPLOY_CAPI_EXPORT void | FD_C_RuntimeOptionWrapperSetModelBuffer (__fd_keep FD_C_RuntimeOptionWrapper *fd_c_runtime_option_wrapper, const char *model_buffer, const char *params_buffer, const FD_C_ModelFormat) |

| Specify the memory buffer of model and parameter. Used when model and params are loaded directly from memory. More... | |

| FASTDEPLOY_CAPI_EXPORT void | FD_C_RuntimeOptionWrapperUseCpu (__fd_keep FD_C_RuntimeOptionWrapper *fd_c_runtime_option_wrapper) |

| Use cpu to inference, the runtime will inference on CPU by default. More... | |

| FASTDEPLOY_CAPI_EXPORT void | FD_C_RuntimeOptionWrapperUseGpu (__fd_keep FD_C_RuntimeOptionWrapper *fd_c_runtime_option_wrapper, int gpu_id) |

| Use Nvidia GPU to inference. More... | |

| FASTDEPLOY_CAPI_EXPORT void | FD_C_RuntimeOptionWrapperUseRKNPU2 (__fd_keep FD_C_RuntimeOptionWrapper *fd_c_runtime_option_wrapper, FD_C_rknpu2_CpuName rknpu2_name, FD_C_rknpu2_CoreMask rknpu2_core) |

| Use RKNPU2 to inference. More... | |

| FASTDEPLOY_CAPI_EXPORT void | FD_C_RuntimeOptionWrapperUseTimVX (__fd_keep FD_C_RuntimeOptionWrapper *fd_c_runtime_option_wrapper) |

| Use TimVX to inference. More... | |

| FASTDEPLOY_CAPI_EXPORT void | FD_C_RuntimeOptionWrapperUseAscend (__fd_keep FD_C_RuntimeOptionWrapper *fd_c_runtime_option_wrapper) |

| Use Huawei Ascend to inference. More... | |

| FASTDEPLOY_CAPI_EXPORT void | FD_C_RuntimeOptionWrapperUseKunlunXin (__fd_keep FD_C_RuntimeOptionWrapper *fd_c_runtime_option_wrapper, int kunlunxin_id, int l3_workspace_size, FD_C_Bool locked, FD_C_Bool autotune, const char *autotune_file, const char *precision, FD_C_Bool adaptive_seqlen, FD_C_Bool enable_multi_stream) |

| Turn on KunlunXin XPU. More... | |

| FASTDEPLOY_CAPI_EXPORT void | FD_C_RuntimeOptionWrapperUseSophgo (__fd_keep FD_C_RuntimeOptionWrapper *fd_c_runtime_option_wrapper) |

| FASTDEPLOY_CAPI_EXPORT void | FD_C_RuntimeOptionWrapperSetExternalStream (__fd_keep FD_C_RuntimeOptionWrapper *fd_c_runtime_option_wrapper, void *external_stream) |

| FASTDEPLOY_CAPI_EXPORT void | FD_C_RuntimeOptionWrapperSetCpuThreadNum (__fd_keep FD_C_RuntimeOptionWrapper *fd_c_runtime_option_wrapper, int thread_num) |

| Set number of cpu threads while inference on CPU, by default it will decided by the different backends. More... | |

| FASTDEPLOY_CAPI_EXPORT void | FD_C_RuntimeOptionWrapperSetOrtGraphOptLevel (__fd_keep FD_C_RuntimeOptionWrapper *fd_c_runtime_option_wrapper, int level) |

| Set ORT graph opt level, default is decide by ONNX Runtime itself. More... | |

| FASTDEPLOY_CAPI_EXPORT void | FD_C_RuntimeOptionWrapperUsePaddleBackend (__fd_keep FD_C_RuntimeOptionWrapper *fd_c_runtime_option_wrapper) |

| Set Paddle Inference as inference backend, support CPU/GPU. More... | |

| FASTDEPLOY_CAPI_EXPORT void | FD_C_RuntimeOptionWrapperUsePaddleInferBackend (__fd_keep FD_C_RuntimeOptionWrapper *fd_c_runtime_option_wrapper) |

| Wrapper function of UsePaddleBackend() More... | |

| FASTDEPLOY_CAPI_EXPORT void | FD_C_RuntimeOptionWrapperUseOrtBackend (__fd_keep FD_C_RuntimeOptionWrapper *fd_c_runtime_option_wrapper) |

| Set ONNX Runtime as inference backend, support CPU/GPU. More... | |

| FASTDEPLOY_CAPI_EXPORT void | FD_C_RuntimeOptionWrapperUseSophgoBackend (__fd_keep FD_C_RuntimeOptionWrapper *fd_c_runtime_option_wrapper) |

| Set SOPHGO Runtime as inference backend, support CPU/GPU. More... | |

| FASTDEPLOY_CAPI_EXPORT void | FD_C_RuntimeOptionWrapperUseTrtBackend (__fd_keep FD_C_RuntimeOptionWrapper *fd_c_runtime_option_wrapper) |

| Set TensorRT as inference backend, only support GPU. More... | |

| FASTDEPLOY_CAPI_EXPORT void | FD_C_RuntimeOptionWrapperUsePorosBackend (__fd_keep FD_C_RuntimeOptionWrapper *fd_c_runtime_option_wrapper) |

| Set Poros backend as inference backend, support CPU/GPU. More... | |

| FASTDEPLOY_CAPI_EXPORT void | FD_C_RuntimeOptionWrapperUseOpenVINOBackend (__fd_keep FD_C_RuntimeOptionWrapper *fd_c_runtime_option_wrapper) |

| Set OpenVINO as inference backend, only support CPU. More... | |

| FASTDEPLOY_CAPI_EXPORT void | FD_C_RuntimeOptionWrapperUseLiteBackend (__fd_keep FD_C_RuntimeOptionWrapper *fd_c_runtime_option_wrapper) |

| Set Paddle Lite as inference backend, only support arm cpu. More... | |

| FASTDEPLOY_CAPI_EXPORT void | FD_C_RuntimeOptionWrapperUsePaddleLiteBackend (__fd_keep FD_C_RuntimeOptionWrapper *fd_c_runtime_option_wrapper) |

| Wrapper function of UseLiteBackend() More... | |

| FASTDEPLOY_CAPI_EXPORT void | FD_C_RuntimeOptionWrapperSetPaddleMKLDNN (__fd_keep FD_C_RuntimeOptionWrapper *fd_c_runtime_option_wrapper, FD_C_Bool pd_mkldnn) |

| Set mkldnn switch while using Paddle Inference as inference backend. More... | |

| FASTDEPLOY_CAPI_EXPORT void | FD_C_RuntimeOptionWrapperEnablePaddleToTrt (__fd_keep FD_C_RuntimeOptionWrapper *fd_c_runtime_option_wrapper) |

| If TensorRT backend is used, EnablePaddleToTrt will change to use Paddle Inference backend, and use its integrated TensorRT instead. More... | |

| FASTDEPLOY_CAPI_EXPORT void | FD_C_RuntimeOptionWrapperDeletePaddleBackendPass (__fd_keep FD_C_RuntimeOptionWrapper *fd_c_runtime_option_wrapper, const char *delete_pass_name) |

| Delete pass by name while using Paddle Inference as inference backend, this can be called multiple times to delete a set of passes. More... | |

| FASTDEPLOY_CAPI_EXPORT void | FD_C_RuntimeOptionWrapperEnablePaddleLogInfo (__fd_keep FD_C_RuntimeOptionWrapper *fd_c_runtime_option_wrapper) |

| Enable print debug information while using Paddle Inference as inference backend, the backend disable the debug information by default. More... | |

| FASTDEPLOY_CAPI_EXPORT void | FD_C_RuntimeOptionWrapperDisablePaddleLogInfo (__fd_keep FD_C_RuntimeOptionWrapper *fd_c_runtime_option_wrapper) |

| Disable print debug information while using Paddle Inference as inference backend. More... | |

| FASTDEPLOY_CAPI_EXPORT void | FD_C_RuntimeOptionWrapperSetPaddleMKLDNNCacheSize (__fd_keep FD_C_RuntimeOptionWrapper *fd_c_runtime_option_wrapper, int size) |

| Set shape cache size while using Paddle Inference with mkldnn, by default it will cache all the difference shape. More... | |

| FASTDEPLOY_CAPI_EXPORT void | FD_C_RuntimeOptionWrapperSetOpenVINODevice (__fd_keep FD_C_RuntimeOptionWrapper *fd_c_runtime_option_wrapper, const char *name) |

| Set device name for OpenVINO, default 'CPU', can also be 'AUTO', 'GPU', 'GPU.1'.... More... | |

| FASTDEPLOY_CAPI_EXPORT void | FD_C_RuntimeOptionWrapperSetLiteOptimizedModelDir (__fd_keep FD_C_RuntimeOptionWrapper *fd_c_runtime_option_wrapper, const char *optimized_model_dir) |

| Set optimzed model dir for Paddle Lite backend. More... | |

| FASTDEPLOY_CAPI_EXPORT void | FD_C_RuntimeOptionWrapperSetLiteSubgraphPartitionPath (__fd_keep FD_C_RuntimeOptionWrapper *fd_c_runtime_option_wrapper, const char *nnadapter_subgraph_partition_config_path) |

| Set subgraph partition path for Paddle Lite backend. More... | |

| FASTDEPLOY_CAPI_EXPORT void | FD_C_RuntimeOptionWrapperSetLiteSubgraphPartitionConfigBuffer (__fd_keep FD_C_RuntimeOptionWrapper *fd_c_runtime_option_wrapper, const char *nnadapter_subgraph_partition_config_buffer) |

| Set subgraph partition path for Paddle Lite backend. More... | |

| FASTDEPLOY_CAPI_EXPORT void | FD_C_RuntimeOptionWrapperSetLiteContextProperties (__fd_keep FD_C_RuntimeOptionWrapper *fd_c_runtime_option_wrapper, const char *nnadapter_context_properties) |

| Set context properties for Paddle Lite backend. More... | |

| FASTDEPLOY_CAPI_EXPORT void | FD_C_RuntimeOptionWrapperSetLiteModelCacheDir (__fd_keep FD_C_RuntimeOptionWrapper *fd_c_runtime_option_wrapper, const char *nnadapter_model_cache_dir) |

| Set model cache dir for Paddle Lite backend. More... | |

| FASTDEPLOY_CAPI_EXPORT void | FD_C_RuntimeOptionWrapperSetLiteMixedPrecisionQuantizationConfigPath (__fd_keep FD_C_RuntimeOptionWrapper *fd_c_runtime_option_wrapper, const char *nnadapter_mixed_precision_quantization_config_path) |

| Set mixed precision quantization config path for Paddle Lite backend. More... | |

| FASTDEPLOY_CAPI_EXPORT void | FD_C_RuntimeOptionWrapperEnableLiteFP16 (__fd_keep FD_C_RuntimeOptionWrapper *fd_c_runtime_option_wrapper) |

| enable half precision while use paddle lite backend More... | |

| FASTDEPLOY_CAPI_EXPORT void | FD_C_RuntimeOptionWrapperDisableLiteFP16 (__fd_keep FD_C_RuntimeOptionWrapper *fd_c_runtime_option_wrapper) |

| disable half precision, change to full precision(float32) More... | |

| FASTDEPLOY_CAPI_EXPORT void | FD_C_RuntimeOptionWrapperEnableLiteInt8 (__fd_keep FD_C_RuntimeOptionWrapper *fd_c_runtime_option_wrapper) |

| enable int8 precision while use paddle lite backend More... | |

| FASTDEPLOY_CAPI_EXPORT void | FD_C_RuntimeOptionWrapperDisableLiteInt8 (__fd_keep FD_C_RuntimeOptionWrapper *fd_c_runtime_option_wrapper) |

| disable int8 precision, change to full precision(float32) More... | |

| FASTDEPLOY_CAPI_EXPORT void | FD_C_RuntimeOptionWrapperSetLitePowerMode (__fd_keep FD_C_RuntimeOptionWrapper *fd_c_runtime_option_wrapper, FD_C_LitePowerMode mode) |

| Set power mode while using Paddle Lite as inference backend, mode(0: LITE_POWER_HIGH; 1: LITE_POWER_LOW; 2: LITE_POWER_FULL; 3: LITE_POWER_NO_BIND, 4: LITE_POWER_RAND_HIGH; 5: LITE_POWER_RAND_LOW, refer paddle lite for more details) More... | |

| FASTDEPLOY_CAPI_EXPORT void | FD_C_RuntimeOptionWrapperEnableTrtFP16 (__fd_keep FD_C_RuntimeOptionWrapper *fd_c_runtime_option_wrapper) |

| Enable FP16 inference while using TensorRT backend. Notice: not all the GPU device support FP16, on those device doesn't support FP16, FastDeploy will fallback to FP32 automaticly. More... | |

| FASTDEPLOY_CAPI_EXPORT void | FD_C_RuntimeOptionWrapperDisableTrtFP16 (__fd_keep FD_C_RuntimeOptionWrapper *fd_c_runtime_option_wrapper) |

| Disable FP16 inference while using TensorRT backend. More... | |

| FASTDEPLOY_CAPI_EXPORT void | FD_C_RuntimeOptionWrapperSetTrtCacheFile (__fd_keep FD_C_RuntimeOptionWrapper *fd_c_runtime_option_wrapper, const char *cache_file_path) |

Set cache file path while use TensorRT backend. Loadding a Paddle/ONNX model and initialize TensorRT will take a long time, by this interface it will save the tensorrt engine to cache_file_path, and load it directly while execute the code again. More... | |

| FASTDEPLOY_CAPI_EXPORT void | FD_C_RuntimeOptionWrapperEnablePinnedMemory (__fd_keep FD_C_RuntimeOptionWrapper *fd_c_runtime_option_wrapper) |

| Enable pinned memory. Pinned memory can be utilized to speedup the data transfer between CPU and GPU. Currently it's only suppurted in TRT backend and Paddle Inference backend. More... | |

| FASTDEPLOY_CAPI_EXPORT void | FD_C_RuntimeOptionWrapperDisablePinnedMemory (__fd_keep FD_C_RuntimeOptionWrapper *fd_c_runtime_option_wrapper) |

| Disable pinned memory. More... | |

| FASTDEPLOY_CAPI_EXPORT void | FD_C_RuntimeOptionWrapperEnablePaddleTrtCollectShape (__fd_keep FD_C_RuntimeOptionWrapper *fd_c_runtime_option_wrapper) |

| Enable to collect shape in paddle trt backend. More... | |

| FASTDEPLOY_CAPI_EXPORT void | FD_C_RuntimeOptionWrapperDisablePaddleTrtCollectShape (__fd_keep FD_C_RuntimeOptionWrapper *fd_c_runtime_option_wrapper) |

| Disable to collect shape in paddle trt backend. More... | |

| FASTDEPLOY_CAPI_EXPORT void | FD_C_RuntimeOptionWrapperSetOpenVINOStreams (__fd_keep FD_C_RuntimeOptionWrapper *fd_c_runtime_option_wrapper, int num_streams) |

| Set number of streams by the OpenVINO backends. More... | |

| FASTDEPLOY_CAPI_EXPORT void | FD_C_RuntimeOptionWrapperUseIpu (__fd_keep FD_C_RuntimeOptionWrapper *fd_c_runtime_option_wrapper, int device_num, int micro_batch_size, FD_C_Bool enable_pipelining, int batches_per_step) |

| Graphcore IPU to inference. More... | |

Typedef Documentation

◆ FD_C_RuntimeOptionWrapper

| typedef struct FD_C_RuntimeOptionWrapper FD_C_RuntimeOptionWrapper |

Function Documentation

◆ FD_C_CreateRuntimeOptionWrapper()

| FASTDEPLOY_CAPI_EXPORT __fd_give FD_C_RuntimeOptionWrapper* FD_C_CreateRuntimeOptionWrapper | ( | ) |

Create a new FD_C_RuntimeOptionWrapper object.

- Returns

- Return a pointer to FD_C_RuntimeOptionWrapper object

◆ FD_C_DestroyRuntimeOptionWrapper()

| FASTDEPLOY_CAPI_EXPORT void FD_C_DestroyRuntimeOptionWrapper | ( | __fd_take FD_C_RuntimeOptionWrapper * | fd_c_runtime_option_wrapper | ) |

Destroy a FD_C_RuntimeOptionWrapper object.

- Parameters

-

[in] fd_c_runtime_option_wrapper pointer to FD_C_RuntimeOptionWrapper object

◆ FD_C_RuntimeOptionWrapperDeletePaddleBackendPass()

| FASTDEPLOY_CAPI_EXPORT void FD_C_RuntimeOptionWrapperDeletePaddleBackendPass | ( | __fd_keep FD_C_RuntimeOptionWrapper * | fd_c_runtime_option_wrapper, |

| const char * | delete_pass_name | ||

| ) |

Delete pass by name while using Paddle Inference as inference backend, this can be called multiple times to delete a set of passes.

- Parameters

-

[in] fd_c_runtime_option_wrapper pointer to FD_C_RuntimeOptionWrapper object [in] delete_pass_name pass name

◆ FD_C_RuntimeOptionWrapperDisableLiteFP16()

| FASTDEPLOY_CAPI_EXPORT void FD_C_RuntimeOptionWrapperDisableLiteFP16 | ( | __fd_keep FD_C_RuntimeOptionWrapper * | fd_c_runtime_option_wrapper | ) |

disable half precision, change to full precision(float32)

- Parameters

-

[in] fd_c_runtime_option_wrapper pointer to FD_C_RuntimeOptionWrapper object

◆ FD_C_RuntimeOptionWrapperDisableLiteInt8()

| FASTDEPLOY_CAPI_EXPORT void FD_C_RuntimeOptionWrapperDisableLiteInt8 | ( | __fd_keep FD_C_RuntimeOptionWrapper * | fd_c_runtime_option_wrapper | ) |

disable int8 precision, change to full precision(float32)

- Parameters

-

[in] fd_c_runtime_option_wrapper pointer to FD_C_RuntimeOptionWrapper object

◆ FD_C_RuntimeOptionWrapperDisablePaddleLogInfo()

| FASTDEPLOY_CAPI_EXPORT void FD_C_RuntimeOptionWrapperDisablePaddleLogInfo | ( | __fd_keep FD_C_RuntimeOptionWrapper * | fd_c_runtime_option_wrapper | ) |

Disable print debug information while using Paddle Inference as inference backend.

- Parameters

-

[in] fd_c_runtime_option_wrapper pointer to FD_C_RuntimeOptionWrapper object

◆ FD_C_RuntimeOptionWrapperDisablePaddleTrtCollectShape()

| FASTDEPLOY_CAPI_EXPORT void FD_C_RuntimeOptionWrapperDisablePaddleTrtCollectShape | ( | __fd_keep FD_C_RuntimeOptionWrapper * | fd_c_runtime_option_wrapper | ) |

Disable to collect shape in paddle trt backend.

- Parameters

-

[in] fd_c_runtime_option_wrapper pointer to FD_C_RuntimeOptionWrapper object

◆ FD_C_RuntimeOptionWrapperDisablePinnedMemory()

| FASTDEPLOY_CAPI_EXPORT void FD_C_RuntimeOptionWrapperDisablePinnedMemory | ( | __fd_keep FD_C_RuntimeOptionWrapper * | fd_c_runtime_option_wrapper | ) |

Disable pinned memory.

- Parameters

-

[in] fd_c_runtime_option_wrapper pointer to FD_C_RuntimeOptionWrapper object

◆ FD_C_RuntimeOptionWrapperDisableTrtFP16()

| FASTDEPLOY_CAPI_EXPORT void FD_C_RuntimeOptionWrapperDisableTrtFP16 | ( | __fd_keep FD_C_RuntimeOptionWrapper * | fd_c_runtime_option_wrapper | ) |

Disable FP16 inference while using TensorRT backend.

- Parameters

-

[in] fd_c_runtime_option_wrapper pointer to FD_C_RuntimeOptionWrapper object

◆ FD_C_RuntimeOptionWrapperEnableLiteFP16()

| FASTDEPLOY_CAPI_EXPORT void FD_C_RuntimeOptionWrapperEnableLiteFP16 | ( | __fd_keep FD_C_RuntimeOptionWrapper * | fd_c_runtime_option_wrapper | ) |

enable half precision while use paddle lite backend

- Parameters

-

[in] fd_c_runtime_option_wrapper pointer to FD_C_RuntimeOptionWrapper object

◆ FD_C_RuntimeOptionWrapperEnableLiteInt8()

| FASTDEPLOY_CAPI_EXPORT void FD_C_RuntimeOptionWrapperEnableLiteInt8 | ( | __fd_keep FD_C_RuntimeOptionWrapper * | fd_c_runtime_option_wrapper | ) |

enable int8 precision while use paddle lite backend

- Parameters

-

[in] fd_c_runtime_option_wrapper pointer to FD_C_RuntimeOptionWrapper object

◆ FD_C_RuntimeOptionWrapperEnablePaddleLogInfo()

| FASTDEPLOY_CAPI_EXPORT void FD_C_RuntimeOptionWrapperEnablePaddleLogInfo | ( | __fd_keep FD_C_RuntimeOptionWrapper * | fd_c_runtime_option_wrapper | ) |

Enable print debug information while using Paddle Inference as inference backend, the backend disable the debug information by default.

- Parameters

-

[in] fd_c_runtime_option_wrapper pointer to FD_C_RuntimeOptionWrapper object

◆ FD_C_RuntimeOptionWrapperEnablePaddleToTrt()

| FASTDEPLOY_CAPI_EXPORT void FD_C_RuntimeOptionWrapperEnablePaddleToTrt | ( | __fd_keep FD_C_RuntimeOptionWrapper * | fd_c_runtime_option_wrapper | ) |

If TensorRT backend is used, EnablePaddleToTrt will change to use Paddle Inference backend, and use its integrated TensorRT instead.

- Parameters

-

[in] fd_c_runtime_option_wrapper pointer to FD_C_RuntimeOptionWrapper object

◆ FD_C_RuntimeOptionWrapperEnablePaddleTrtCollectShape()

| FASTDEPLOY_CAPI_EXPORT void FD_C_RuntimeOptionWrapperEnablePaddleTrtCollectShape | ( | __fd_keep FD_C_RuntimeOptionWrapper * | fd_c_runtime_option_wrapper | ) |

Enable to collect shape in paddle trt backend.

- Parameters

-

[in] fd_c_runtime_option_wrapper pointer to FD_C_RuntimeOptionWrapper object

◆ FD_C_RuntimeOptionWrapperEnablePinnedMemory()

| FASTDEPLOY_CAPI_EXPORT void FD_C_RuntimeOptionWrapperEnablePinnedMemory | ( | __fd_keep FD_C_RuntimeOptionWrapper * | fd_c_runtime_option_wrapper | ) |

Enable pinned memory. Pinned memory can be utilized to speedup the data transfer between CPU and GPU. Currently it's only suppurted in TRT backend and Paddle Inference backend.

- Parameters

-

[in] fd_c_runtime_option_wrapper pointer to FD_C_RuntimeOptionWrapper object

◆ FD_C_RuntimeOptionWrapperEnableTrtFP16()

| FASTDEPLOY_CAPI_EXPORT void FD_C_RuntimeOptionWrapperEnableTrtFP16 | ( | __fd_keep FD_C_RuntimeOptionWrapper * | fd_c_runtime_option_wrapper | ) |

Enable FP16 inference while using TensorRT backend. Notice: not all the GPU device support FP16, on those device doesn't support FP16, FastDeploy will fallback to FP32 automaticly.

- Parameters

-

[in] fd_c_runtime_option_wrapper pointer to FD_C_RuntimeOptionWrapper object

◆ FD_C_RuntimeOptionWrapperSetCpuThreadNum()

| FASTDEPLOY_CAPI_EXPORT void FD_C_RuntimeOptionWrapperSetCpuThreadNum | ( | __fd_keep FD_C_RuntimeOptionWrapper * | fd_c_runtime_option_wrapper, |

| int | thread_num | ||

| ) |

Set number of cpu threads while inference on CPU, by default it will decided by the different backends.

- Parameters

-

[in] fd_c_runtime_option_wrapper pointer to FD_C_RuntimeOptionWrapper object [in] thread_num number of threads

◆ FD_C_RuntimeOptionWrapperSetExternalStream()

| FASTDEPLOY_CAPI_EXPORT void FD_C_RuntimeOptionWrapperSetExternalStream | ( | __fd_keep FD_C_RuntimeOptionWrapper * | fd_c_runtime_option_wrapper, |

| void * | external_stream | ||

| ) |

◆ FD_C_RuntimeOptionWrapperSetLiteContextProperties()

| FASTDEPLOY_CAPI_EXPORT void FD_C_RuntimeOptionWrapperSetLiteContextProperties | ( | __fd_keep FD_C_RuntimeOptionWrapper * | fd_c_runtime_option_wrapper, |

| const char * | nnadapter_context_properties | ||

| ) |

Set context properties for Paddle Lite backend.

- Parameters

-

[in] fd_c_runtime_option_wrapper pointer to FD_C_RuntimeOptionWrapper object [in] nnadapter_context_properties context properties

◆ FD_C_RuntimeOptionWrapperSetLiteMixedPrecisionQuantizationConfigPath()

| FASTDEPLOY_CAPI_EXPORT void FD_C_RuntimeOptionWrapperSetLiteMixedPrecisionQuantizationConfigPath | ( | __fd_keep FD_C_RuntimeOptionWrapper * | fd_c_runtime_option_wrapper, |

| const char * | nnadapter_mixed_precision_quantization_config_path | ||

| ) |

Set mixed precision quantization config path for Paddle Lite backend.

- Parameters

-

[in] fd_c_runtime_option_wrapper pointer to FD_C_RuntimeOptionWrapper object [in] nnadapter_mixed_precision_quantization_config_path mixed precision quantization config path

◆ FD_C_RuntimeOptionWrapperSetLiteModelCacheDir()

| FASTDEPLOY_CAPI_EXPORT void FD_C_RuntimeOptionWrapperSetLiteModelCacheDir | ( | __fd_keep FD_C_RuntimeOptionWrapper * | fd_c_runtime_option_wrapper, |

| const char * | nnadapter_model_cache_dir | ||

| ) |

Set model cache dir for Paddle Lite backend.

- Parameters

-

[in] fd_c_runtime_option_wrapper pointer to FD_C_RuntimeOptionWrapper object [in] nnadapter_model_cache_dir model cache dir

◆ FD_C_RuntimeOptionWrapperSetLiteOptimizedModelDir()

| FASTDEPLOY_CAPI_EXPORT void FD_C_RuntimeOptionWrapperSetLiteOptimizedModelDir | ( | __fd_keep FD_C_RuntimeOptionWrapper * | fd_c_runtime_option_wrapper, |

| const char * | optimized_model_dir | ||

| ) |

Set optimzed model dir for Paddle Lite backend.

- Parameters

-

[in] fd_c_runtime_option_wrapper pointer to FD_C_RuntimeOptionWrapper object [in] optimized_model_dir optimzed model dir

◆ FD_C_RuntimeOptionWrapperSetLitePowerMode()

| FASTDEPLOY_CAPI_EXPORT void FD_C_RuntimeOptionWrapperSetLitePowerMode | ( | __fd_keep FD_C_RuntimeOptionWrapper * | fd_c_runtime_option_wrapper, |

| FD_C_LitePowerMode | mode | ||

| ) |

Set power mode while using Paddle Lite as inference backend, mode(0: LITE_POWER_HIGH; 1: LITE_POWER_LOW; 2: LITE_POWER_FULL; 3: LITE_POWER_NO_BIND, 4: LITE_POWER_RAND_HIGH; 5: LITE_POWER_RAND_LOW, refer paddle lite for more details)

- Parameters

-

[in] fd_c_runtime_option_wrapper pointer to FD_C_RuntimeOptionWrapper object [in] mode power mode

◆ FD_C_RuntimeOptionWrapperSetLiteSubgraphPartitionConfigBuffer()

| FASTDEPLOY_CAPI_EXPORT void FD_C_RuntimeOptionWrapperSetLiteSubgraphPartitionConfigBuffer | ( | __fd_keep FD_C_RuntimeOptionWrapper * | fd_c_runtime_option_wrapper, |

| const char * | nnadapter_subgraph_partition_config_buffer | ||

| ) |

Set subgraph partition path for Paddle Lite backend.

- Parameters

-

[in] fd_c_runtime_option_wrapper pointer to FD_C_RuntimeOptionWrapper object [in] nnadapter_subgraph_partition_config_buffer subgraph partition path

◆ FD_C_RuntimeOptionWrapperSetLiteSubgraphPartitionPath()

| FASTDEPLOY_CAPI_EXPORT void FD_C_RuntimeOptionWrapperSetLiteSubgraphPartitionPath | ( | __fd_keep FD_C_RuntimeOptionWrapper * | fd_c_runtime_option_wrapper, |

| const char * | nnadapter_subgraph_partition_config_path | ||

| ) |

Set subgraph partition path for Paddle Lite backend.

- Parameters

-

[in] fd_c_runtime_option_wrapper pointer to FD_C_RuntimeOptionWrapper object [in] nnadapter_subgraph_partition_config_path subgraph partition path

◆ FD_C_RuntimeOptionWrapperSetModelBuffer()

| FASTDEPLOY_CAPI_EXPORT void FD_C_RuntimeOptionWrapperSetModelBuffer | ( | __fd_keep FD_C_RuntimeOptionWrapper * | fd_c_runtime_option_wrapper, |

| const char * | model_buffer, | ||

| const char * | params_buffer, | ||

| const FD_C_ModelFormat | |||

| ) |

Specify the memory buffer of model and parameter. Used when model and params are loaded directly from memory.

- Parameters

-

[in] fd_c_runtime_option_wrapper pointer to FD_C_RuntimeOptionWrapper object [in] model_buffer The memory buffer of model [in] params_buffer The memory buffer of the combined parameters file [in] format Format of the loaded model

◆ FD_C_RuntimeOptionWrapperSetModelPath()

| FASTDEPLOY_CAPI_EXPORT void FD_C_RuntimeOptionWrapperSetModelPath | ( | __fd_keep FD_C_RuntimeOptionWrapper * | fd_c_runtime_option_wrapper, |

| const char * | model_path, | ||

| const char * | params_path, | ||

| const FD_C_ModelFormat | format | ||

| ) |

Set path of model file and parameter file.

- Parameters

-

[in] fd_c_runtime_option_wrapper pointer to FD_C_RuntimeOptionWrapper object [in] model_path Path of model file, e.g ResNet50/model.pdmodel for Paddle format model / ResNet50/model.onnx for ONNX format model [in] params_path Path of parameter file, this only used when the model format is Paddle, e.g Resnet50/model.pdiparams [in] format Format of the loaded model

◆ FD_C_RuntimeOptionWrapperSetOpenVINODevice()

| FASTDEPLOY_CAPI_EXPORT void FD_C_RuntimeOptionWrapperSetOpenVINODevice | ( | __fd_keep FD_C_RuntimeOptionWrapper * | fd_c_runtime_option_wrapper, |

| const char * | name | ||

| ) |

Set device name for OpenVINO, default 'CPU', can also be 'AUTO', 'GPU', 'GPU.1'....

- Parameters

-

[in] fd_c_runtime_option_wrapper pointer to FD_C_RuntimeOptionWrapper object [in] name device name

◆ FD_C_RuntimeOptionWrapperSetOpenVINOStreams()

| FASTDEPLOY_CAPI_EXPORT void FD_C_RuntimeOptionWrapperSetOpenVINOStreams | ( | __fd_keep FD_C_RuntimeOptionWrapper * | fd_c_runtime_option_wrapper, |

| int | num_streams | ||

| ) |

Set number of streams by the OpenVINO backends.

- Parameters

-

[in] fd_c_runtime_option_wrapper pointer to FD_C_RuntimeOptionWrapper object [in] num_streams number of streams

◆ FD_C_RuntimeOptionWrapperSetOrtGraphOptLevel()

| FASTDEPLOY_CAPI_EXPORT void FD_C_RuntimeOptionWrapperSetOrtGraphOptLevel | ( | __fd_keep FD_C_RuntimeOptionWrapper * | fd_c_runtime_option_wrapper, |

| int | level | ||

| ) |

Set ORT graph opt level, default is decide by ONNX Runtime itself.

- Parameters

-

[in] fd_c_runtime_option_wrapper pointer to FD_C_RuntimeOptionWrapper object [in] level optimization level

◆ FD_C_RuntimeOptionWrapperSetPaddleMKLDNN()

| FASTDEPLOY_CAPI_EXPORT void FD_C_RuntimeOptionWrapperSetPaddleMKLDNN | ( | __fd_keep FD_C_RuntimeOptionWrapper * | fd_c_runtime_option_wrapper, |

| FD_C_Bool | pd_mkldnn | ||

| ) |

Set mkldnn switch while using Paddle Inference as inference backend.

- Parameters

-

[in] fd_c_runtime_option_wrapper pointer to FD_C_RuntimeOptionWrapper object [in] pd_mkldnn whether to use mkldnn

◆ FD_C_RuntimeOptionWrapperSetPaddleMKLDNNCacheSize()

| FASTDEPLOY_CAPI_EXPORT void FD_C_RuntimeOptionWrapperSetPaddleMKLDNNCacheSize | ( | __fd_keep FD_C_RuntimeOptionWrapper * | fd_c_runtime_option_wrapper, |

| int | size | ||

| ) |

Set shape cache size while using Paddle Inference with mkldnn, by default it will cache all the difference shape.

- Parameters

-

[in] fd_c_runtime_option_wrapper pointer to FD_C_RuntimeOptionWrapper object [in] size cache size

◆ FD_C_RuntimeOptionWrapperSetTrtCacheFile()

| FASTDEPLOY_CAPI_EXPORT void FD_C_RuntimeOptionWrapperSetTrtCacheFile | ( | __fd_keep FD_C_RuntimeOptionWrapper * | fd_c_runtime_option_wrapper, |

| const char * | cache_file_path | ||

| ) |

Set cache file path while use TensorRT backend. Loadding a Paddle/ONNX model and initialize TensorRT will take a long time, by this interface it will save the tensorrt engine to cache_file_path, and load it directly while execute the code again.

- Parameters

-

[in] fd_c_runtime_option_wrapper pointer to FD_C_RuntimeOptionWrapper object [in] cache_file_path cache file path

◆ FD_C_RuntimeOptionWrapperUseAscend()

| FASTDEPLOY_CAPI_EXPORT void FD_C_RuntimeOptionWrapperUseAscend | ( | __fd_keep FD_C_RuntimeOptionWrapper * | fd_c_runtime_option_wrapper | ) |

Use Huawei Ascend to inference.

- Parameters

-

[in] fd_c_runtime_option_wrapper pointer to FD_C_RuntimeOptionWrapper object

◆ FD_C_RuntimeOptionWrapperUseCpu()

| FASTDEPLOY_CAPI_EXPORT void FD_C_RuntimeOptionWrapperUseCpu | ( | __fd_keep FD_C_RuntimeOptionWrapper * | fd_c_runtime_option_wrapper | ) |

Use cpu to inference, the runtime will inference on CPU by default.

- Parameters

-

[in] fd_c_runtime_option_wrapper pointer to FD_C_RuntimeOptionWrapper object

◆ FD_C_RuntimeOptionWrapperUseGpu()

| FASTDEPLOY_CAPI_EXPORT void FD_C_RuntimeOptionWrapperUseGpu | ( | __fd_keep FD_C_RuntimeOptionWrapper * | fd_c_runtime_option_wrapper, |

| int | gpu_id | ||

| ) |

Use Nvidia GPU to inference.

- Parameters

-

[in] fd_c_runtime_option_wrapper pointer to FD_C_RuntimeOptionWrapper object

◆ FD_C_RuntimeOptionWrapperUseIpu()

| FASTDEPLOY_CAPI_EXPORT void FD_C_RuntimeOptionWrapperUseIpu | ( | __fd_keep FD_C_RuntimeOptionWrapper * | fd_c_runtime_option_wrapper, |

| int | device_num, | ||

| int | micro_batch_size, | ||

| FD_C_Bool | enable_pipelining, | ||

| int | batches_per_step | ||

| ) |

Graphcore IPU to inference.

- Parameters

-

[in] fd_c_runtime_option_wrapper pointer to FD_C_RuntimeOptionWrapper object [in] device_num the number of IPUs. [in] micro_batch_size the batch size in the graph, only work when graph has no batch shape info. [in] enable_pipelining enable pipelining. [in] batches_per_step the number of batches per run in pipelining.

◆ FD_C_RuntimeOptionWrapperUseKunlunXin()

| FASTDEPLOY_CAPI_EXPORT void FD_C_RuntimeOptionWrapperUseKunlunXin | ( | __fd_keep FD_C_RuntimeOptionWrapper * | fd_c_runtime_option_wrapper, |

| int | kunlunxin_id, | ||

| int | l3_workspace_size, | ||

| FD_C_Bool | locked, | ||

| FD_C_Bool | autotune, | ||

| const char * | autotune_file, | ||

| const char * | precision, | ||

| FD_C_Bool | adaptive_seqlen, | ||

| FD_C_Bool | enable_multi_stream | ||

| ) |

Turn on KunlunXin XPU.

- Parameters

-

[in] fd_c_runtime_option_wrapper pointer to FD_C_RuntimeOptionWrapper object [in] kunlunxin_id the KunlunXin XPU card to use (default is 0). [in] l3_workspace_size The size of the video memory allocated by the l3 cache, the maximum is 16M. [in] locked Whether the allocated L3 cache can be locked. If false, it means that the L3 cache is not locked, and the allocated L3 cache can be shared by multiple models, and multiple models sharing the L3 cache will be executed sequentially on the card. [in] autotune Whether to autotune the conv operator in the model. If true, when the conv operator of a certain dimension is executed for the first time, it will automatically search for a better algorithm to improve the performance of subsequent conv operators of the same dimension. [in] autotune_file Specify the path of the autotune file. If autotune_file is specified, the algorithm specified in the file will be used and autotune will not be performed again. [in] precision Calculation accuracy of multi_encoder [in] adaptive_seqlen Is the input of multi_encoder variable length [in] enable_multi_stream Whether to enable the multi stream of KunlunXin XPU.

◆ FD_C_RuntimeOptionWrapperUseLiteBackend()

| FASTDEPLOY_CAPI_EXPORT void FD_C_RuntimeOptionWrapperUseLiteBackend | ( | __fd_keep FD_C_RuntimeOptionWrapper * | fd_c_runtime_option_wrapper | ) |

Set Paddle Lite as inference backend, only support arm cpu.

- Parameters

-

[in] fd_c_runtime_option_wrapper pointer to FD_C_RuntimeOptionWrapper object

◆ FD_C_RuntimeOptionWrapperUseOpenVINOBackend()

| FASTDEPLOY_CAPI_EXPORT void FD_C_RuntimeOptionWrapperUseOpenVINOBackend | ( | __fd_keep FD_C_RuntimeOptionWrapper * | fd_c_runtime_option_wrapper | ) |

Set OpenVINO as inference backend, only support CPU.

- Parameters

-

[in] fd_c_runtime_option_wrapper pointer to FD_C_RuntimeOptionWrapper object

◆ FD_C_RuntimeOptionWrapperUseOrtBackend()

| FASTDEPLOY_CAPI_EXPORT void FD_C_RuntimeOptionWrapperUseOrtBackend | ( | __fd_keep FD_C_RuntimeOptionWrapper * | fd_c_runtime_option_wrapper | ) |

Set ONNX Runtime as inference backend, support CPU/GPU.

- Parameters

-

[in] fd_c_runtime_option_wrapper pointer to FD_C_RuntimeOptionWrapper object

◆ FD_C_RuntimeOptionWrapperUsePaddleBackend()

| FASTDEPLOY_CAPI_EXPORT void FD_C_RuntimeOptionWrapperUsePaddleBackend | ( | __fd_keep FD_C_RuntimeOptionWrapper * | fd_c_runtime_option_wrapper | ) |

Set Paddle Inference as inference backend, support CPU/GPU.

- Parameters

-

[in] fd_c_runtime_option_wrapper pointer to FD_C_RuntimeOptionWrapper object

◆ FD_C_RuntimeOptionWrapperUsePaddleInferBackend()

| FASTDEPLOY_CAPI_EXPORT void FD_C_RuntimeOptionWrapperUsePaddleInferBackend | ( | __fd_keep FD_C_RuntimeOptionWrapper * | fd_c_runtime_option_wrapper | ) |

Wrapper function of UsePaddleBackend()

- Parameters

-

[in] fd_c_runtime_option_wrapper pointer to FD_C_RuntimeOptionWrapper object

◆ FD_C_RuntimeOptionWrapperUsePaddleLiteBackend()

| FASTDEPLOY_CAPI_EXPORT void FD_C_RuntimeOptionWrapperUsePaddleLiteBackend | ( | __fd_keep FD_C_RuntimeOptionWrapper * | fd_c_runtime_option_wrapper | ) |

Wrapper function of UseLiteBackend()

- Parameters

-

[in] fd_c_runtime_option_wrapper pointer to FD_C_RuntimeOptionWrapper object

◆ FD_C_RuntimeOptionWrapperUsePorosBackend()

| FASTDEPLOY_CAPI_EXPORT void FD_C_RuntimeOptionWrapperUsePorosBackend | ( | __fd_keep FD_C_RuntimeOptionWrapper * | fd_c_runtime_option_wrapper | ) |

Set Poros backend as inference backend, support CPU/GPU.

- Parameters

-

[in] fd_c_runtime_option_wrapper pointer to FD_C_RuntimeOptionWrapper object

◆ FD_C_RuntimeOptionWrapperUseRKNPU2()

| FASTDEPLOY_CAPI_EXPORT void FD_C_RuntimeOptionWrapperUseRKNPU2 | ( | __fd_keep FD_C_RuntimeOptionWrapper * | fd_c_runtime_option_wrapper, |

| FD_C_rknpu2_CpuName | rknpu2_name, | ||

| FD_C_rknpu2_CoreMask | rknpu2_core | ||

| ) |

Use RKNPU2 to inference.

- Parameters

-

[in] fd_c_runtime_option_wrapper pointer to FD_C_RuntimeOptionWrapper object [in] rknpu2_name CpuName enum value [in] rknpu2_core CoreMask enum value

◆ FD_C_RuntimeOptionWrapperUseSophgo()

| FASTDEPLOY_CAPI_EXPORT void FD_C_RuntimeOptionWrapperUseSophgo | ( | __fd_keep FD_C_RuntimeOptionWrapper * | fd_c_runtime_option_wrapper | ) |

Use Sophgo to inference

- Parameters

-

[in] fd_c_runtime_option_wrapper pointer to FD_C_RuntimeOptionWrapper object

◆ FD_C_RuntimeOptionWrapperUseSophgoBackend()

| FASTDEPLOY_CAPI_EXPORT void FD_C_RuntimeOptionWrapperUseSophgoBackend | ( | __fd_keep FD_C_RuntimeOptionWrapper * | fd_c_runtime_option_wrapper | ) |

Set SOPHGO Runtime as inference backend, support CPU/GPU.

- Parameters

-

[in] fd_c_runtime_option_wrapper pointer to FD_C_RuntimeOptionWrapper object

◆ FD_C_RuntimeOptionWrapperUseTimVX()

| FASTDEPLOY_CAPI_EXPORT void FD_C_RuntimeOptionWrapperUseTimVX | ( | __fd_keep FD_C_RuntimeOptionWrapper * | fd_c_runtime_option_wrapper | ) |

Use TimVX to inference.

- Parameters

-

[in] fd_c_runtime_option_wrapper pointer to FD_C_RuntimeOptionWrapper object

◆ FD_C_RuntimeOptionWrapperUseTrtBackend()

| FASTDEPLOY_CAPI_EXPORT void FD_C_RuntimeOptionWrapperUseTrtBackend | ( | __fd_keep FD_C_RuntimeOptionWrapper * | fd_c_runtime_option_wrapper | ) |

Set TensorRT as inference backend, only support GPU.

- Parameters

-

[in] fd_c_runtime_option_wrapper pointer to FD_C_RuntimeOptionWrapper object

1.8.13

1.8.13